MLOps shouldn't stop at deployment

Make your machine learning efforts more reliable and build confidence in your deployed models with tools for outlier, adversarial and drift detection.

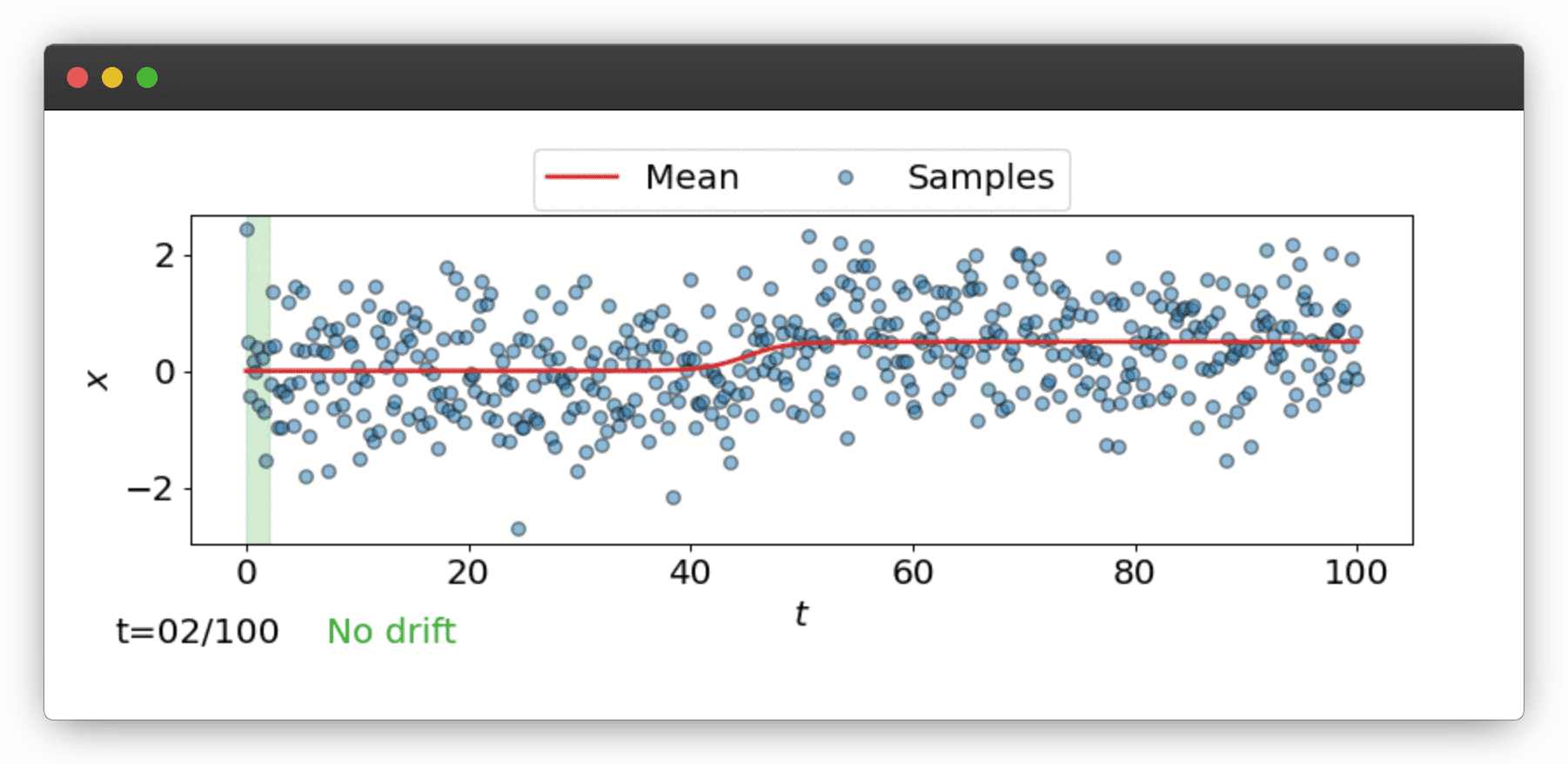

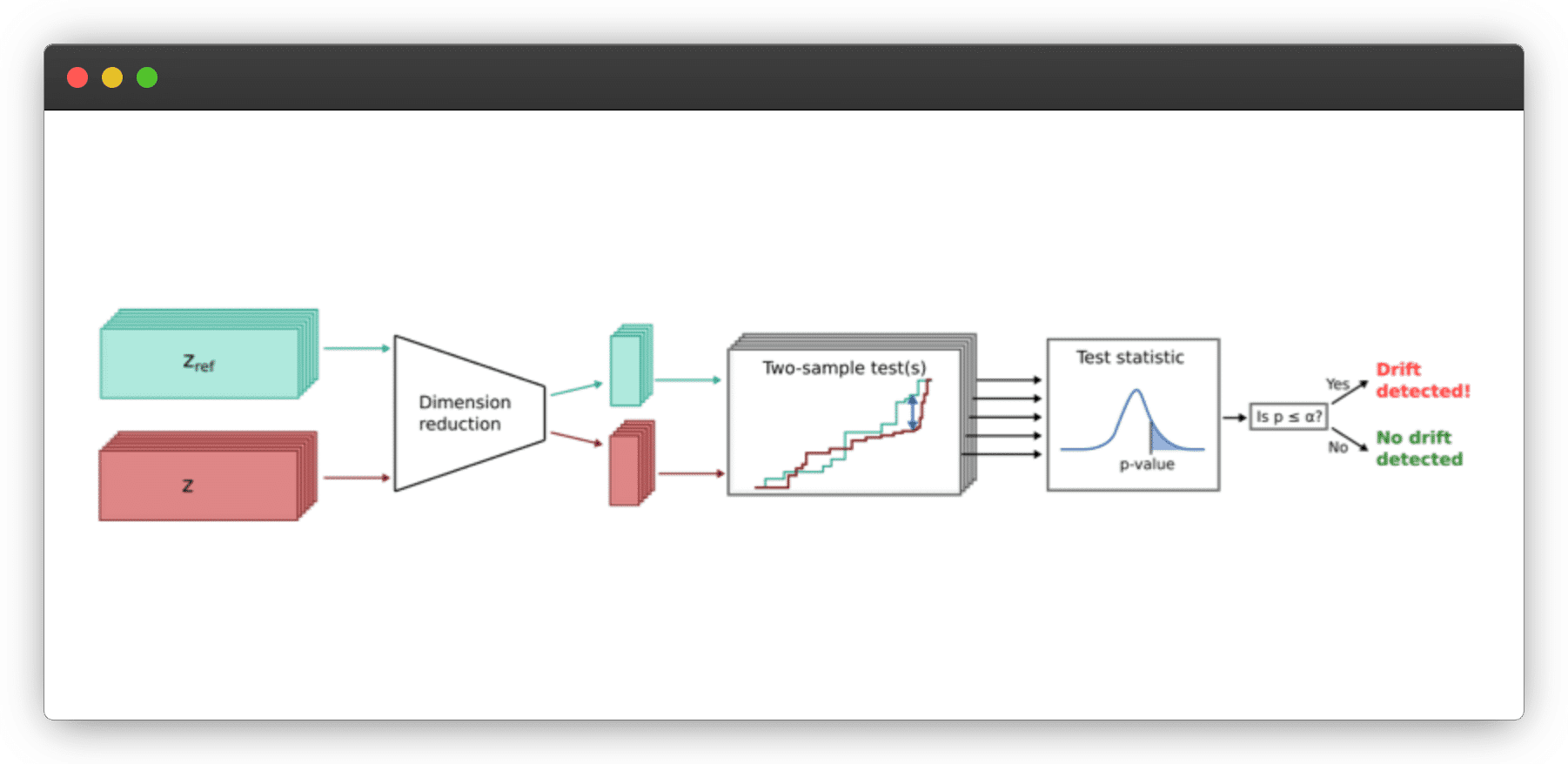

Take control of model performance with advanced drift detection

Detect changes in the data distribution and determine whether the detected drift is likely to degrade model performance.
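To make the idea concrete, here is a minimal sketch of one common way to test for drift on a single numeric feature: a two-sample Kolmogorov-Smirnov test that compares live data against a reference sample. It is a generic illustration in plain Python, not Alibi Detect's API. The function names `ks_statistic` and `drift_detected`, the 5% significance level and the synthetic data are all assumptions made for the example, and the threshold uses the large-sample approximation of the KS critical value.

```python
import math
import random


def ks_statistic(reference, sample):
    """Largest gap between the two empirical CDFs (two-sample KS statistic)."""
    ref, cur = sorted(reference), sorted(sample)
    n, m = len(ref), len(cur)
    i = j = 0
    max_gap = 0.0
    while i < n and j < m:
        value = min(ref[i], cur[j])
        # Step both empirical CDFs past every observation equal to `value`.
        while i < n and ref[i] == value:
            i += 1
        while j < m and cur[j] == value:
            j += 1
        max_gap = max(max_gap, abs(i / n - j / m))
    return max_gap


def drift_detected(reference, sample, alpha=0.05):
    """Flag drift when the KS statistic exceeds the approximate critical value."""
    n, m = len(reference), len(sample)
    # Large-sample approximation: c(alpha) * sqrt((n + m) / (n * m)).
    critical = math.sqrt(-0.5 * math.log(alpha / 2)) * math.sqrt((n + m) / (n * m))
    return ks_statistic(reference, sample) > critical


# Hypothetical data: training-time feature values vs. shifted production values.
rng = random.Random(0)
reference = [rng.gauss(0.0, 1.0) for _ in range(500)]
production = [rng.gauss(1.0, 1.0) for _ in range(500)]
print("drift detected:", drift_detected(reference, production))
```

A test like this only says that the inputs have changed. Deciding whether that change hurts the model still requires checking performance on labelled data or a proxy metric.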

Discover critical anomalies in input and output data using outlier detection

Alert business units and users when unexpected behavior occurs.
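As a rough illustration of the principle, the sketch below scores each incoming value by how far it sits from the median of a reference sample, measured in units of the median absolute deviation (a robust z-score). It is a simple stand-in written in plain Python, not the method or API that Alibi Detect uses. The helper names, the example data and the conventional 3.5 cut-off are assumptions made for the example.

```python
import statistics


def fit_outlier_detector(reference):
    """Learn the median and median absolute deviation (MAD) of normal data."""
    median = statistics.median(reference)
    mad = statistics.median(abs(x - median) for x in reference)
    if mad == 0:
        raise ValueError("reference data has zero spread; MAD score is undefined")
    return median, mad


def outlier_score(x, median, mad):
    """Robust z-score; 0.6745 rescales the MAD to match a standard deviation
    when the reference data is roughly normal."""
    return 0.6745 * abs(x - median) / mad


def flag_outliers(values, median, mad, threshold=3.5):
    """Return the values whose score exceeds the (assumed) threshold."""
    return [x for x in values if outlier_score(x, median, mad) > threshold]


# Hypothetical reference data, e.g. a model input seen during training.
reference = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
median, mad = fit_outlier_detector(reference)

# New observations arriving in production.
incoming = [11, 50, 9, -30]
print("outliers:", flag_outliers(incoming, median, mad))
```

In practice the flagged values would feed an alerting channel rather than a print statement, and the same scoring can be applied to model outputs as well as inputs.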

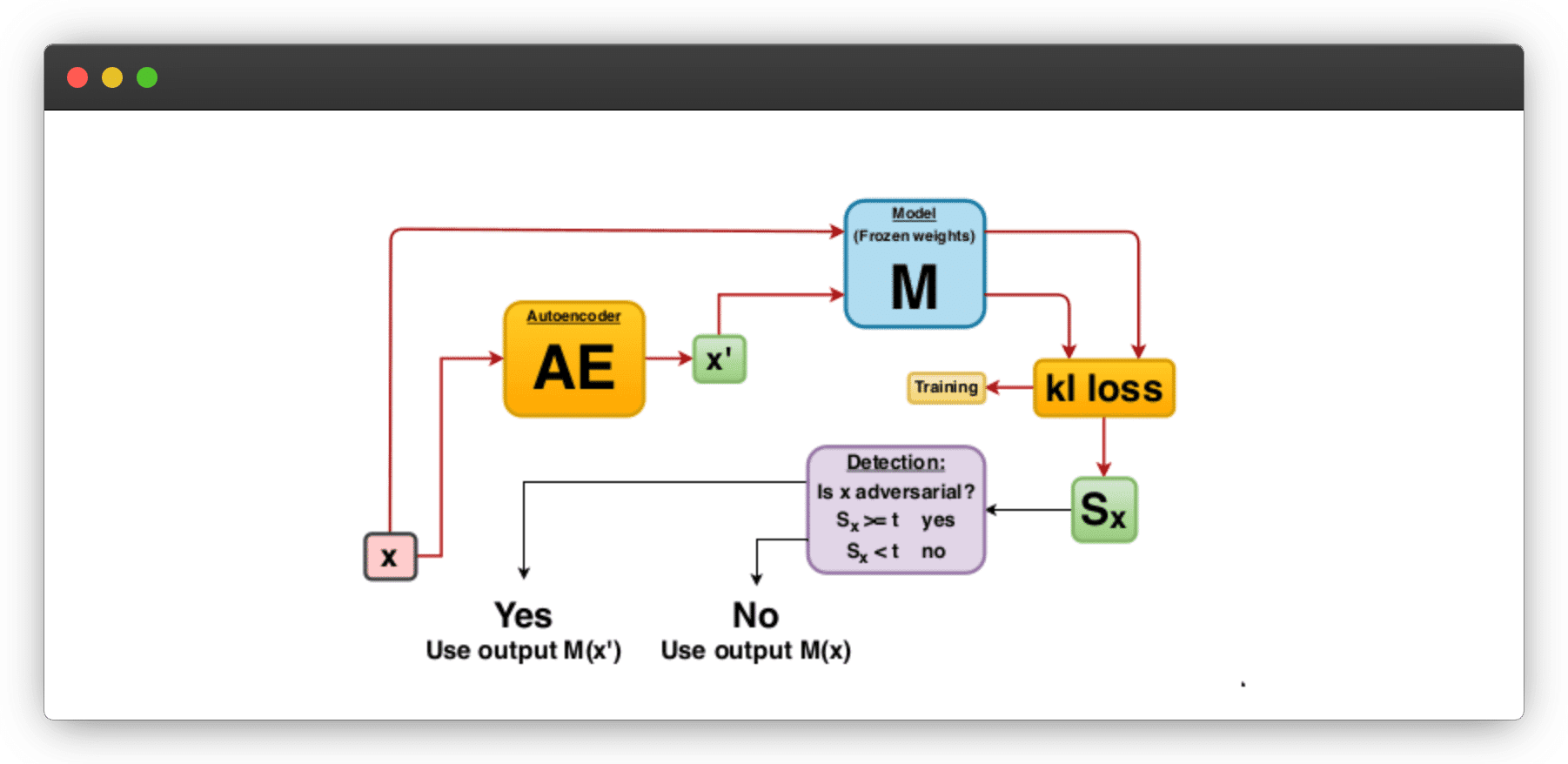

Adversarial detection ensures that models perform consistently

Return a score that indicates the presence of features and instances crafted to trick the model into a wrong outcome.

Start Now

Start using Alibi Detect today through GitHub. You’ll only need a license for production use. It’s free for all non-production and academic uses.

Features

Workflows

Front-end deployment of models, explainers and canaries means that people who are not Kubernetes experts can deploy ML models, and testing can be done in live environments.

Model management

Metrics and dashboards let you monitor models to improve performance and surface errors quickly for easier debugging.

Model confidence

Model explainers let you understand and adjust which features influence the model, while anomaly detection flags drift in the data and alerts users to adversarial attacks.

Stack stability

Backwards compatibility, rolling updates and a full SLA, alongside maintained integrations with all frameworks and clouds, mean a seamless install and reliable infrastructure.